Tightening the Verification of PAC Bounds using Formal Verification Results

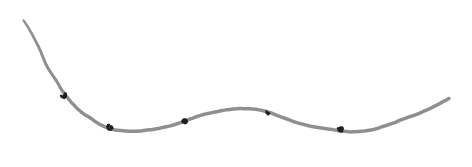

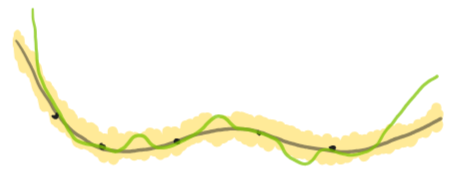

In this paper, we wanted to formalise the following intuition. Suppose you have a neural network that you want to learn the following distribution.

Of course, we do not have access to this distribution, otherwise, why would we need to learn it? Instead, we have access to some training data sampled from this distribution.

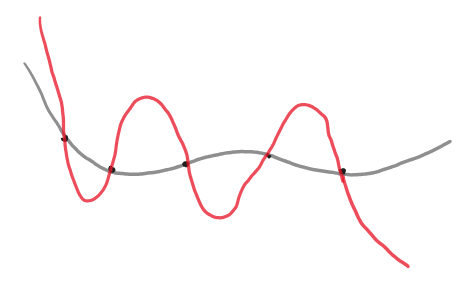

Typically, neural networks are over-parameterised so they can in theory learn to memorise the data. In this case, the neural network's representation is unlikely to generalise in areas further away from the training data.

Probabilistic generalisation bounds give a probability of how large the error of the neural network's representation is against the underlying distribution. Therefore, they need to account for the possibility that the neural network has just memorised the training data, and thus they are fairly loose.

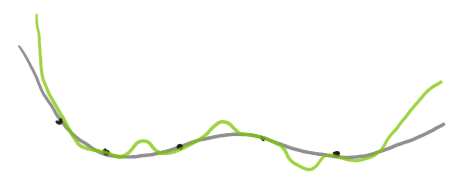

In practice, it is observed that the representations that the neural networks learn generalise fairly well, despite having the capacity to memorise the training data.

However, until now we had no way of incorporating this observation into the derivation of probabilistic generalisation bounds. Our intuition was that finding a way to incorporate this into the derivation of probabilistic generalisation bounds would lead to substantially tighter generalisation bounds. Indeed, if the neural network representation is generalised to unseen data one would expect that it has captured some aspect of the underlying distribution of the training data.

We were motivated to work on this problem since this could lead to substantially tighter generalisation bounds that could then be used to certify the performance of neural networks.

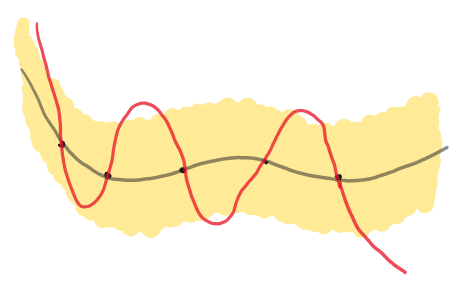

The approach we used to incorporate the observation that neural networks generalise well into the derivation of generalisation bounds was inspired by formal verification methods. Methods of formal verification test the robustness of neural networks. That is, they can be used to determine how sensitive the network performance is to changes in the input data. More specifically, for each training point, we can determine the maximum perturbation we can make to it whilst still getting the neural network to behave correctly. This means that within this region of perturbation around a training point, we can be sure that the neural network's representation of the data matches that of the underlying distribution.

With this formalisation of the generalisation observation, we successfully obtain substantially tighter generalisation bounds for neural networks. Thus we formally verify our intuition that robust neural networks have a larger generalisation capacity.

We chose this approach since there is substantial work on formal verification methods. Hence, the theoretical consequences of our work could be realised in practice. However, it remains to be seen exactly how these improvements in the bounds will manifest in practice. Since our generalisation bounds are probabilistic, with this work we aim to initiate a shift in perspective in the formal verification community, from one of verifying spatially larger perturbations to one of verifying probabilistically larger perturbations.